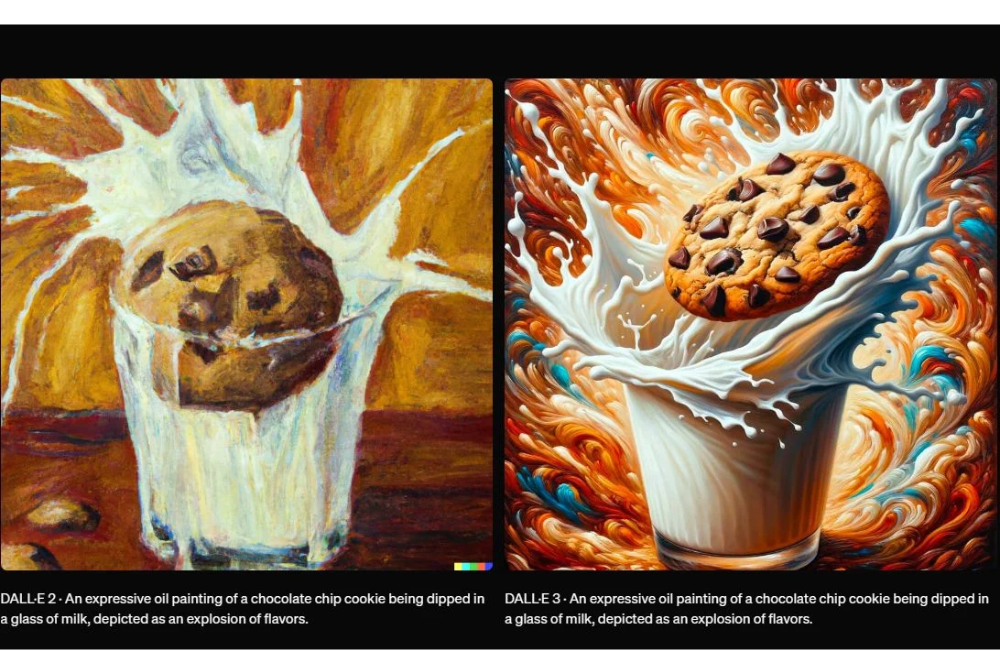

OpenAI, the organization behind ChatGPT, announced on Tuesday the launch of a new tool capable of detecting images created by its text-to-image generator, DALL·E 3.

The decision to develop and release this software comes amid growing concerns about the role of AI-generated content in this year’s global elections, including the prominent use of such content to spread misinformation.

According to Microsoft-backed OpenAI, the tool correctly identified images created by DALL·E 3 about 98% of the time in internal testing and held up against common image alterations such as compression, cropping, and changes in saturation.

OpenAI is also adding tamper-resistant watermarking, which marks digital content such as photos and audio with a signal designed to be difficult to remove. To help establish stronger standards for media provenance, OpenAI has joined an industry coalition that includes tech giants such as Google, Microsoft, and Adobe.

To address these challenges further, OpenAI and Microsoft are launching a $2 million "societal resilience" fund to support AI education and awareness. The initiative reflects a growing recognition that societies need the knowledge to navigate the complexities introduced by advanced AI technologies.

Written by Alius Noreika
